The Conspiracy Theorists Control Our Public Sphere

Explaining the rhetorical tricks conspiracy theorists use against the public

How did the US government get taken over by conspiracy theorists? Conspiracy is an especially effective rhetorical trick because it makes heroes out of believers and doesn’t allow evidence to disprove the narrative.

“They don’t want you to know the truth.”

“They’re all liars. I’m the only one you can trust to tell you the truth.”

“Why won’t they even let us ask questions?”

“Do your own research.”

If you’ve heard any of these phrases from news media, politicians, or friends, then you’ve been attacked with conspiracy rhetoric. Conspiracy is a powerful rhetorical trick responsible for insurrection, vaccine rejection, and climate catastrophe (among other tragedies). It’s also a lucrative and successful media and politics strategy.

Like other rhetorical tricks, conspiracy is a form of anti-democratic manipulation. It works insidiously by appealing to outrage and curiosity, then burrows into your thoughts and refuses to leave.

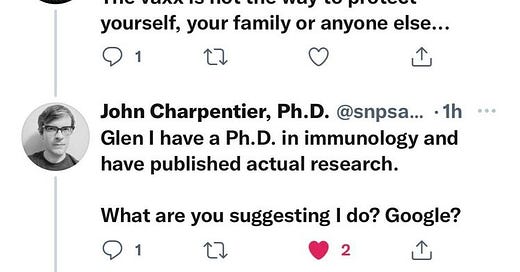

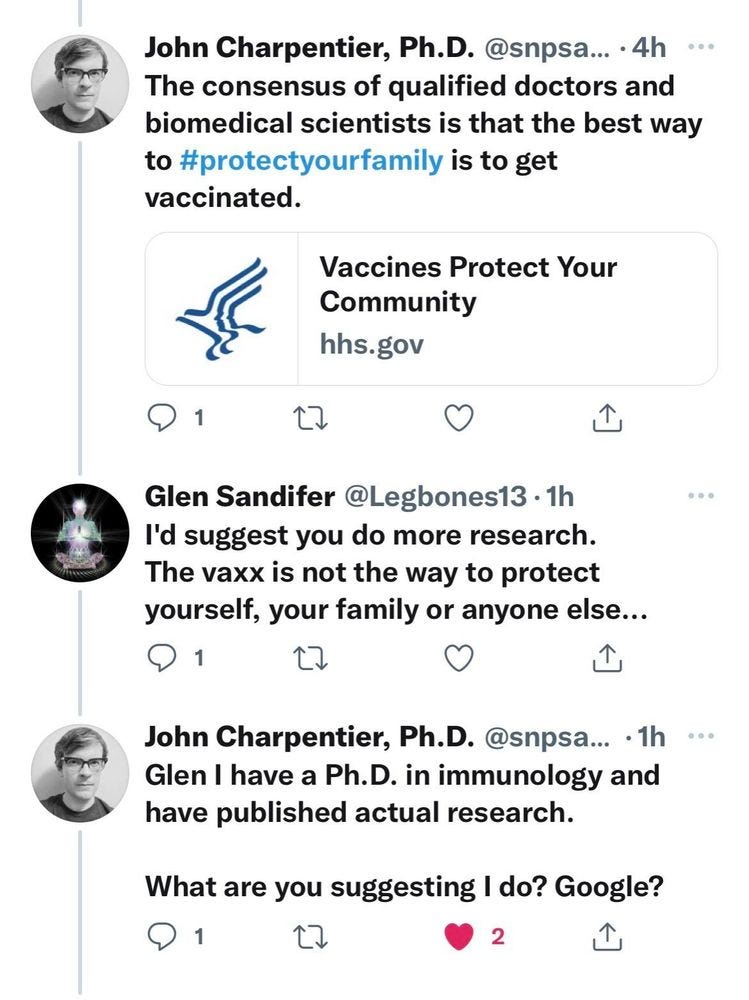

Conspiracy rhetoric has the appearance of an argument (a claim supported by evidence and reasoning), but unlike an actual argument, it can never be proven true or false. This is what separates a conspiracy theory/narrative/lie from other kinds of narratives or theories. While conspiracists often urge people to “do their own research,” actual research is the last thing that conspiracists want. Actual research is conducted by people who are willing to follow the evidence and will accept whatever the evidence proves or disproves. Conspiracy doesn’t work that way at all.

Conspiracy rhetoric is like a “self-sealing” tire, the kind lined with a goo that keeps the tire from going flat when you run over a nail. In the same way, a conspiracy is a “self-sealing” narrative: it doesn’t pop when confronted by facts, logic, or evidence.

A narrative that cannot be punctured by logic or evidence is inherently dangerous because it asks audiences to trust their feelings rather than facts, and it gives autocratic power to the person who spreads the conspiracy.

As I explained in my book Demagogue for President: The Rhetorical Genius of Donald Trump, conspiracy rhetoric “creates a perverse and powerful legitimacy for anyone who uses it.” First, conspiracy theorists claim to be wiser than others because they aren’t duped by the conspiracy. Because they’re not fooled, and everyone else is, they elevate themselves into a position of power and authority. Second, since they’re not bound by logic or facts, conspiracy theorists can use their authority to make accusations against anyone or anything that they want. This allows manipulators to wield conspiracy like a cudgel, as a form of ad baculum threat (a threat of force or intimidation used to silence opponents).

More practically, wielding conspiracy theory can make a manipulator a lot of money. Take notorious conspiracy theorist Alex Jones, for example. His business model is to build a huge audience by using conspiracy and outrage to pretend that he is the only one who tells the truth about how the scary world actually works. He then sells emergency preparedness and snake oil items designed to appeal to his conspiracy-addled audience, making a reported $800k a day off of outrage and conspiracy. Likewise, according to the January 6th Committee, Trump used his “big lie” election denial conspiracy to make $250 million (and counting) off of his supporters.

Conspiracy rhetoric is part con, part cult, and part live-action game—and that makes it very well designed to take advantage of our innate cognitive, social, and emotional vulnerabilities. According to social psychology research, “conspiracy beliefs are consequential, universal, emotional, and social,” meaning that most of us believe in one or two of them, we act in accordance with those beliefs, we experience social benefits from our beliefs, and we are emotionally and ego-involved in our conspiracy beliefs.

People who believe in conspiracies do not see themselves as victims; they see themselves as heroes. They want to believe because believing in the conspiracy makes them powerful: they believe that they see the world clearly (they’re not duped by the conspiracy) and that they have an important role to play (they can “do their own research” and investigate, expose, and stop the conspiracy). That kind of heroic positioning is mighty enticing, especially when it comes with social rewards. A community of believers/disbelievers grows around the conspiracy, and status rewards can be earned for believing, investigating, and exposing the conspiracy.

Manipulators use a toxic combination of conspiracy, fear appeals, and outrage to get our attention. According to research by cognitive scientists, being exposed to conspiracy theories can flood our brains with stress hormones that take over our ability to reason by activating the brain’s natural “fight or flight” response (called an “amygdala hijacking”). Unfortunately, we’re all vulnerable to conspiracy clickbait. Our brains are attracted to new information, to secret information, to outrage or other negative emotional words, and to polarizing in-group and out-group gossip. Our brains do a Scooby Doo “huh” whenever we hear or read conspiracy. It forces us to pay attention. It’s a trap.

I admire your writing on this topic.

I'm going to risk sharing a frustration on the limits of our language, though, while hoping you don't take this as any form of criticism of you or this piece.

The simple term "conspiracy theory" has become overloaded in a way that distorts our thinking and disrupts our ability to communicate. You describe well the dangers of conspiracy theories when they race ahead of "facts, logic, or evidence." But we've come to lack a term for theories about conspiracies that legitimately explore the unknown, seeking not to exploit rhetorical tricks but simply to discover.

Every reveal of actual conspiracy, such as Watergate, Iran-Contra, MK Ultra, the October Surprise, etc., has been advanced by a theory of the case. The theory was required and not necessarily ill-intentioned.

Now characters like Alex Jones define the term, stretching us way beyond the simple Webster Dictionary meaning of these words. What would we call a Craig Unger who spent over thirty years pursuing the October Surprise story before finally publishing "Den of Spies," an impressive evidentiary exploration of past conspiracy? Craig is not an Alex Jones. We could call Craig a journalist or a reporter, but he was definitely considered a conspiracy theorist until recent years and that does not provide him good company.

What concerns me about this is all the Craig Ungers who don't endure. The stigma of conspiracy theory drives them away from real conspiracies for which adequate evidence is still lacking but discoverable. Then we're just letting the scoundrels maintain cover.

I kick around alternative terms in my head, such as conspiracy barker for Alex and conspiracy analyst for Craig, but I'm just a rando blogger and not likely to be in charge of language any time soon.

Anyway, thanks for allowing me to share my frustration.

Well laid out primer on conspiratorial thinking and its meme-like capabilities. I ordered your book!